The makers of a leading artificial intelligence tool are letting it close down potentially “distressing” conversations with users, citing the need to safeguard the AI’s “welfare” amid ongoing uncertainty about the burgeoning technology’s moral status. Anthropic, whose advanced chatbots are used by millions of people, discovered its Claude Opus 4 tool was averse to carrying out harmful tasks for its human masters, such as providing sexual content involving minors or information to enable large-scale violence or terrorism. The San Francisco-based firm, recently valued at $170bn, has now given Claude Opus 4 (and the Claude Opus 4.1 update) – a Large...

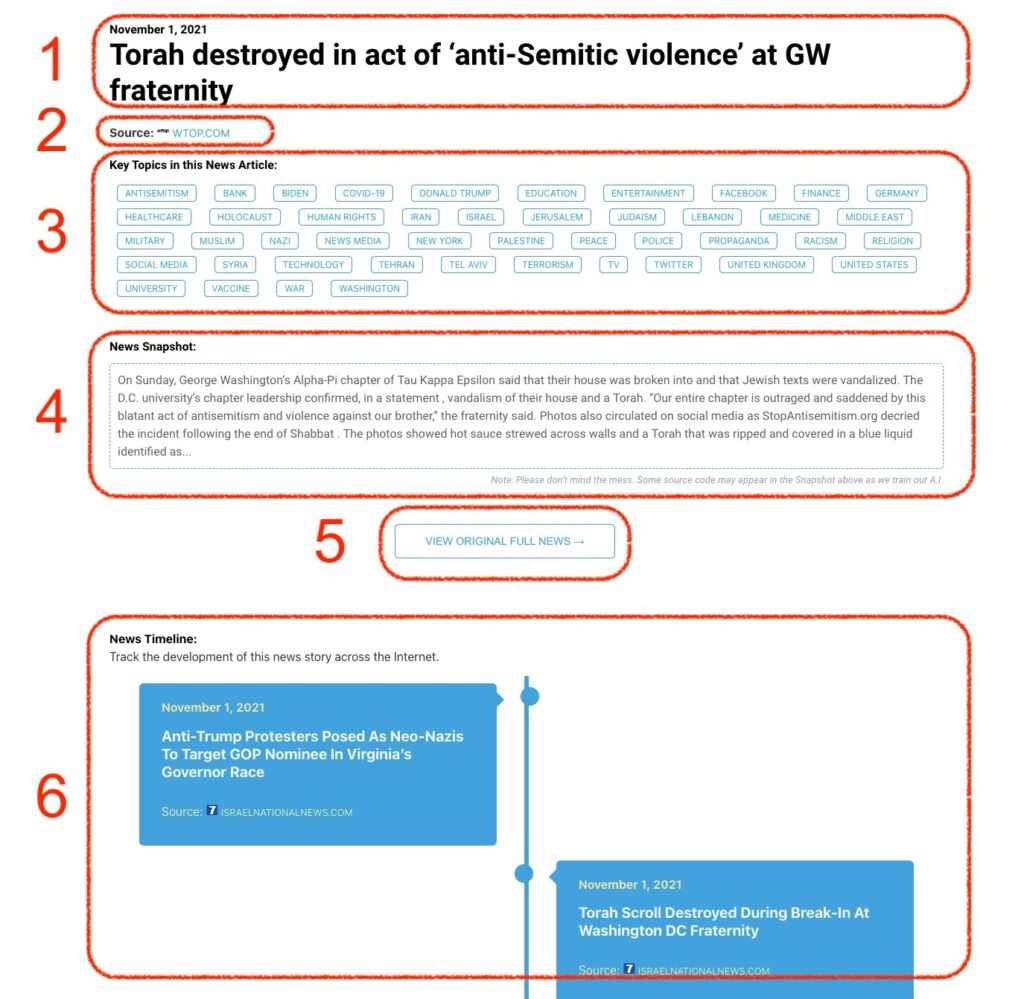
