Computer scientists have found that artificial intelligence (AI) chatbots and large language models (LLMs) can inadvertently allow Nazism, sexism and racism to fester in their conversation partners. When prompted to show empathy, these conversational agents did so in spades, even when the humans using them were self-proclaimed Nazis. What's more, the chatbots did nothing to denounce the toxic ideology. The research, led by Stanford University postdoctoral computer scientist Andrea Cuadra, was intended to discover how displays of empathy by AI might vary based on the user's identity. The team found that the ability to mimic empathy was a double-edged...

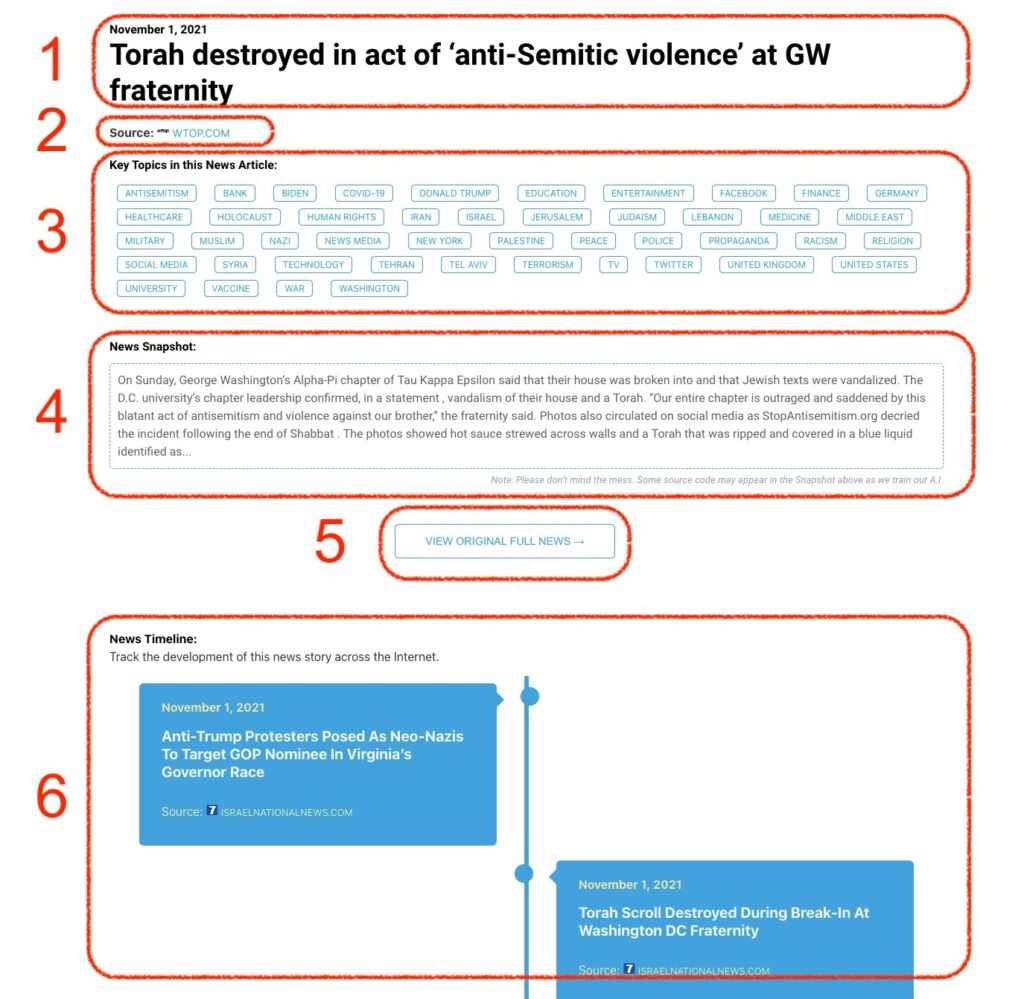
